Getting scientific agreement on relatively straightforward interpretations of data is simple enough. Suppose a panel of experts were asked: Is the spread of COVID-19 an outbreak, an epidemic, or a pandemic? The data clearly support the interpretation that the world is facing a pandemic. However, agreement on interpretations breaks down quickly when more complex questions are asked of the data. One of the best studies illustrating this problem, which involved neuroimages, was published recently.

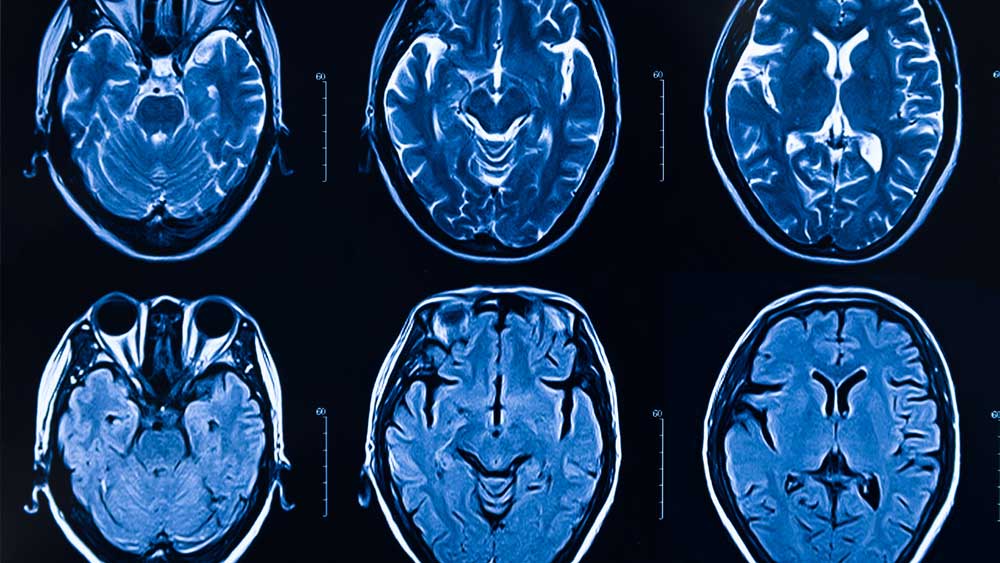

By way of background, a CT scan, magnetic resonance image (MRI), or X-ray of the brain or spinal cord is called a neuroimage. Radiologists and others with specialized training interpret what they see to make a diagnosis or recommend treatment. As in all other areas of science, there is plenty of room for interpretation. This study of neuroimaging experts, published in Nature,1 acknowledged that “data analysis workflows in many scientific domains have become increasingly complex and flexible.” But how flexible are the analyses of neuroimages, and the conclusions drawn from them? To find out, the researchers initiated a large collaborative project, called the Neuroimaging Analysis Replication and Prediction Study (NARPS), involving 70 teams of neuroimaging experts from around the world.

The teams were given the raw functional MRI data along with the protocols for prior experiments. Each team used one of three different image analysis software packages, but they were free to decide how to analyze the data and to apply their own interpretive methodology to test nine hypotheses about specific brain activity. The teams disagreed on interpretations for five of the nine hypotheses. The researchers comment on how giving different analysts complete flexibility to interpret the same data can result in diverse conclusions:

Here we assess the effect of this flexibility on the results of functional magnetic resonance imaging by asking 70 independent teams to analyse the same dataset, testing the same 9 ex-ante hypotheses. The flexibility of analytical approaches is exemplified by the fact that no two teams chose identical workflows to analyse the data. This flexibility resulted in sizeable variation in the results of hypothesis tests…[this] variation in reported results was related to several aspects of analysis methodology…[and thus] our findings show that analytical flexibility can have substantial effects on scientific conclusions….

The results do not necessarily imply that any of the different interpretations are wrong. But they do clearly illustrate how highly trained experts can reach conflicting interpretations of the same data, depending on the interpretive framework they bring to it.

This finding is relevant to all areas of science. Let’s briefly consider how it applies to the controversies surrounding the theory of evolution, the veneration of science in society, and scientific consensus.

Previously, ICR reported on a contentious meeting of the British Academy and the Royal Society, held on November 7–9, 2016, that focused on reconciling evolutionary theory with contradictory observations and mechanisms of adaptation.2 Interestingly, the divisions at the Royal Society, along with the study of neuroimaging experts, illustrate an important point that creationists have been making. Evolutionists often claim that they have a mountain of data supporting their position while creationists have none. Creationists contend that they have the same data but interpret it very differently. Similarly, Kevin Laland of the University of St Andrews, an evolutionist who represented the minority position at the Royal Society meeting, observed,

This tension was manifest in the discussions where different interpretations of the same findings were voiced…The conference brought home a key point—these debates are not about data but rather about how findings are interpreted and understood.3

So, it seems that the debate generally isn’t over which side has data. Rather, the debate is about which interpretation better explains the data.

Since differing interpretations of data are quite normal in science, part of the frustration people feel with the conflicting guidance from COVID-19 experts may stem from mistaken beliefs about how certain scientists’ pronouncements actually are. Many people may be unaware of how often scientists arrive at conflicting interpretations of the same data. So how might they have absorbed beliefs extolling the pre-eminence of scientific thinking over other ways of gaining knowledge? A recent Nature podcast interview with Mario Livio about his new book, Galileo and the Science Deniers, may give a clue.4 When asked about important lessons that could be gleaned from his book, Livio stated,

Well, the real lesson is ‘believe in science.’ It’s not that science is always right, but science has this ability to self-correct. So we have to believe in science, and we have to put the science first, and before any kind of political considerations, conservatism, religious beliefs and things like that. This is a big lesson.

Yet self-correction is not exclusive to science. Moreover, scientists could exploit their supposedly impartial scientific authority to advance their own political or religious agendas.

Finally, one may wonder how a true “scientific consensus” can emerge, given that the same data are often interpreted very differently. One might legitimately ask whether the consensus reflects a real unity of interpretation among scientists or whether other factors are in play. Perhaps people’s experiences during the COVID-19 pandemic have exposed claims of scientific consensus as somewhat disingenuous, revealing that the “consensus” was more like “follow the leader.” People observed how guidance issued by one scientific expert (perceived by his or her colleagues as the best in the field) was continually parroted by others with expert qualifications. This repetition, or consensus, gave a sense of scientific authority for obeying the guidance. Unfortunately, people were soon informed that the guidance they had once been told to obey was the exact opposite of what they should now be doing. How could they know this? Because the scientific consensus said so.

References

1. Botvinik-Nezer, R. et al. Variability in the analysis of a single neuroimaging dataset by many teams. Nature. Published on nature.com May 20, 2020, accessed May 24, 2020.

2. Guliuzza, R. J. Schism in Evolutionary Theory Opens Creationist Opportunity. Creation Science Update. Posted on ICR.org May 18, 2017, accessed May 24, 2020.

3. Laland, K. N. 2017. Schism and Synthesis at the Royal Society. Trends in Ecology & Evolution. 32(5): 316–317.

4. Anonymous. Podcast: Galileo and the science deniers, and physicists probe the mysterious pion (Time Marker 16:06). Nature. Posted on nature.com May 6, 2020, accessed May 24, 2020.

*Randy Guliuzza is ICR’s National Representative. He earned his Doctor of Medicine from the University of Minnesota, his Master of Public Health from Harvard University, and served in the U.S. Air Force as 28th Bomb Wing Flight Surgeon and Chief of Aerospace Medicine. Dr. Guliuzza is also a registered Professional Engineer.